Enhancing Language Models Using RAG Architecture in Azure AI Studio

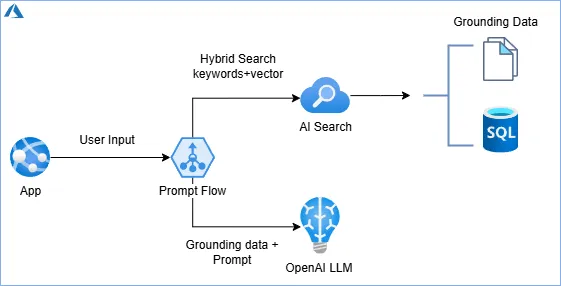

In this guide, we’ll walk you through the process of enhancing language models using RAG architecture in Azure AI Studio. Retrieval-Augmented Generation (RAG) extends the capabilities of a Large Language Model (LLM), such as a GPT model, by adding an information retrieval system. The retrieval step grounds the model in relevant data and controls the context it uses to generate a response.

From Ungrounded to Grounded Prompts

A pre-trained language model answers prompts from its training data alone. However, that data might not match the prompt’s context. Grounding a prompt with relevant data changes how the model responds, making its answers more contextual and accurate.
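
To make the difference concrete, here is a minimal sketch of the same question sent ungrounded and grounded. The function name `build_prompt`, the instruction wording, and the sample passage are illustrative assumptions, not part of Azure AI Studio.

```python
from typing import Optional, Sequence


def build_prompt(question: str, grounding: Optional[Sequence[str]] = None) -> str:
    """Return an ungrounded prompt, or a grounded one when passages are supplied."""
    if not grounding:
        # Ungrounded: the model can only rely on what it learned in training.
        return f"Question: {question}\nAnswer:"
    # Grounded: retrieved passages are placed in the prompt as context.
    context = "\n".join(f"- {passage}" for passage in grounding)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    print(build_prompt("What is the refund window?"))
    print(build_prompt("What is the refund window?",
                       ["Refunds are accepted within 30 days."]))
```

In a RAG solution the grounding passages come from the retrieval system rather than being typed in by hand.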

Introducing Azure AI Studio

Azure AI Studio simplifies AI application development by providing a unified platform that combines the capabilities of Azure AI Services, Azure AI Search, and Azure Machine Learning. This integration lets developers build, manage, and deploy AI applications in one place. With Azure AI Studio, you can deploy a language model, import data, and integrate with services like Azure AI Search.

Deploying a RAG Solution with Azure AI Studio

In this section, I explain how to deploy a RAG solution using Azure AI Studio. Please note that some features are still in preview at the time of writing this post and may have changed.

Solution Components

The solution comprises the following components.

- Data files that provide the interaction context.

- GPT-3.5 LLM for human-like chat interactions.

- A Text Embedding Model to convert content into searchable vectors.

- Azure AI Search for vector and keyword-based searches.

Implementing the RAG pattern in Azure AI Studio involves creating a prompt flow that defines the interaction sequence with the AI models. The prompt flow takes in user input, executes one or more steps (or tools), and ultimately produces an output. These tools prepare a prompt, search for grounding data, and submit the prompt to the language model to receive a response.
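
The shape of such a flow can be sketched in plain Python as a chain of small functions, each one standing in for a tool. This is only an analogy under stated assumptions: the in-memory `DOCS` list, the word-overlap lookup, and `fake_llm` are hypothetical stand-ins, and none of it uses the prompt flow SDK.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Tool = Callable[[State], State]

# Hypothetical grounding data; a real flow would query Azure AI Search.
DOCS = [
    "Azure AI Search supports vector and keyword queries.",
    "A hub stores shared connections and compute.",
]


def lookup(state: State) -> State:
    """Toy retrieval: keep documents sharing at least one word with the question."""
    words = set(state["question"].lower().split())
    hits = [d for d in DOCS if words & set(d.lower().rstrip(".").split())]
    return {**state, "context": hits}


def make_prompt(state: State) -> State:
    """Combine the retrieved context and the question into one prompt."""
    context = "\n".join(state["context"])
    prompt = f"Context:\n{context}\n\nQuestion: {state['question']}"
    return {**state, "prompt": prompt}


def fake_llm(state: State) -> State:
    """Stand-in for the model call; it only reports the prompt size."""
    reply = f"[stand-in reply to a {len(state['prompt'])}-character prompt]"
    return {**state, "answer": reply}


def run_flow(question: str, tools: List[Tool]) -> State:
    """Pass the state through each tool in order, like steps in a flow."""
    state: State = {"question": question}
    for tool in tools:
        state = tool(state)
    return state


if __name__ == "__main__":
    print(run_flow("what is a hub", [lookup, make_prompt, fake_llm])["answer"])
```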

The steps below demonstrate how to set up the solution in Azure AI Studio.

Step 1: Getting Started with Azure AI Studio

Create a Hub: Go to https://ai.azure.com/ and create a hub. The hub connects Azure services such as Azure AI Services, Azure OpenAI Service, and Azure AI Search. It also provisions a Key Vault for secrets and a storage account for data. The hub stores shared components such as model deployments, service connections, compute, and users.

Setting Up AI Project: After setting up the hub, create a new project. This AI Project houses all artifacts like data, models, and prompt flows.

Step 2: Model Deployment

We are using two models in this solution:

GPT Model: We will deploy the gpt-35-turbo-16k model from the model catalog to provide a natural language chat experience.

Embedding Model: To generate vector indexes for the data and perform AI Search, we will deploy the text-embedding-ada-002 model from the model catalog.

To deploy the models, navigate to the model catalog from the left navigation under the Get Started section, search for and select the desired model, and click Deploy. Set the tokens-per-minute rate limit according to your requirements.
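
One rough way to pick a tokens-per-minute value is to multiply the expected request rate by the tokens each request consumes, then add some headroom. The sketch below does that arithmetic; the function name, the 20 percent default headroom, and the sample numbers are assumptions for illustration, not Azure guidance.

```python
def required_tpm(requests_per_minute: int,
                 prompt_tokens: int,
                 completion_tokens: int,
                 headroom_percent: int = 20) -> int:
    """Estimate a tokens-per-minute budget, rounded up to a whole token."""
    base = requests_per_minute * (prompt_tokens + completion_tokens)
    # Integer ceiling division avoids floating-point rounding surprises.
    return (base * (100 + headroom_percent) + 99) // 100


if __name__ == "__main__":
    # Hypothetical load: 10 requests per minute, each with a 1,500-token
    # grounded prompt and a 500-token answer.
    print(required_tpm(10, 1500, 500))
```

Grounded prompts tend to be long because they carry retrieved passages, so the prompt side usually dominates the estimate.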

Step 3: Prepare and Index Data

Choose a suitable dataset that aligns with your use case. This dataset will be used for information retrieval during model inference.

Upload Grounding Data: Navigate to the Data section under Components, select Upload file, and choose the grounding data.

Index Creation: After uploading, go to Indexes under Components, create a new index, select the previously uploaded data, choose the existing Azure AI Search connection, and enter an index name.

Vector Search Configuration: Finally, select the vector search settings and the embedding model deployed for vector generation.
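
Conceptually, vector search compares the embedding of the query with the embedding of each indexed chunk and returns the closest ones. The sketch below shows that idea with cosine similarity over tiny hand-written vectors. Real embeddings from text-embedding-ada-002 have far more dimensions, and the function names and sample vectors here are illustrative assumptions rather than how Azure AI Search is implemented.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: Sequence[float],
          index: Dict[str, Sequence[float]],
          k: int = 2) -> List[str]:
    """Return the ids of the k vectors most similar to the query."""
    ranked = sorted(index.items(),
                    key=lambda item: cosine_similarity(query_vec, item[1]),
                    reverse=True)
    return [doc_id for doc_id, _ in ranked[:k]]


if __name__ == "__main__":
    toy_index = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
    print(top_k([1.0, 0.1], toy_index))
```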

Step 4: Integration with RAG

Implement the RAG pattern within your architecture. This involves configuring retrieval mechanisms and integrating them seamlessly with the generative part of the model. This is done using a prompt flow in Azure AI Studio.

Create a new prompt flow by clicking on the ‘Prompt Flow’ option in the left navigation under the Tool section. Clone the “Q & A on your data” sample from the gallery, which will generate a flow as shown here.

Configure prompt flow tools

First, start a runtime using the ‘Start compute session’ button. Then select each tool to connect it to the models and indexes set up earlier.

Select the lookup tool and connect it to the vector index by choosing ‘mlindex_content’ as the registered index and selecting the index created earlier. Set the query type to ‘hybrid (vector+keyword)’.
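
A hybrid query runs a keyword search and a vector search, then merges the two ranked lists. Azure AI Search documents Reciprocal Rank Fusion (RRF) as its merging method. The sketch below is a toy version of that idea, not the service's implementation; the constant `k=60` and the document ids are illustrative assumptions.

```python
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Merge ranked lists: each document scores 1 / (k + rank) per list it appears in."""
    scores: Dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken by id for a stable order.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


if __name__ == "__main__":
    vector_hits = ["d1", "d2", "d3"]   # hypothetical vector search ranking
    keyword_hits = ["d3", "d1", "d4"]  # hypothetical keyword search ranking
    print(reciprocal_rank_fusion([vector_hits, keyword_hits]))
```

Documents that rank well in both lists rise to the top, which is why hybrid search often retrieves better grounding data than either method alone.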

Review the Python code in the lookup step/tool that retrieves results from Azure AI Search and concatenates them into a JSON string containing an array of content and source entries.
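
The code in the sample flow is not reproduced here. As a rough idea of what such a formatting step does, the sketch below turns a list of search hits into a JSON array of content and source entries. The input shape (dictionaries with `content`, `source`, and `score` keys) and the function name are assumptions and may differ from the sample.

```python
import json
from typing import Any, Dict, List


def format_results(results: List[Dict[str, Any]]) -> str:
    """Keep only content and source from each hit and serialize them as a JSON array."""
    docs = [
        {"content": hit.get("content", ""), "source": hit.get("source", "")}
        for hit in results
    ]
    return json.dumps(docs, ensure_ascii=False)


if __name__ == "__main__":
    hits = [  # hypothetical search hits
        {"content": "Refunds are accepted within 30 days.",
         "source": "policy.pdf", "score": 0.91},
        {"content": "Shipping takes 3 to 5 days.",
         "source": "faq.md", "score": 0.74},
    ]
    print(format_results(hits))
```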

Examine the prompt step and modify the prompt as necessary. Be aware of the prompt variants that can be applied based on conditions such as the output from previous tools or the user input value.
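
As a plain-Python analogy for condition-based prompts (this is not prompt flow's own variant mechanism), the sketch below picks a different template depending on whether the lookup returned any context. The template texts and names are hypothetical.

```python
TEMPLATES = {
    "grounded": (
        "Use the context to answer the question.\n"
        "Context:\n{context}\n"
        "Question: {question}"
    ),
    "no_context": (
        "No supporting documents were found. "
        "Tell the user you cannot answer this question: {question}"
    ),
}


def choose_template(context: str) -> str:
    """Pick a template name based on the output of the previous (lookup) step."""
    return "grounded" if context.strip() else "no_context"


def render(question: str, context: str) -> str:
    """Fill the chosen template with the question and retrieved context."""
    return TEMPLATES[choose_template(context)].format(context=context, question=question)


if __name__ == "__main__":
    print(render("What is the refund window?", "Refunds are accepted within 30 days."))
    print(render("What is the refund window?", ""))
```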

Submit the prompt to the language model in the last step. Update the connection to link to the gpt-35-turbo-16k model and set the response type as text. Optionally, adjust the temperature value to control the creativity level of the model’s response.
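
Temperature is conventionally described as a divisor applied to the model's raw token scores (logits) before they are turned into probabilities. The sketch below shows that standard formula on made-up logits. It illustrates the concept only, since the hosted model's internals are not exposed.

```python
import math
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
    for t in (0.2, 1.0, 2.0):
        probs = softmax_with_temperature(toy_logits, t)
        print(t, [round(p, 3) for p in probs])
```

At low temperature almost all probability goes to the top-scoring token, which gives more deterministic answers. Higher values spread probability across alternatives and produce more varied text.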

Save the steps once completed. To test the flow, type a question in the input textbox and run it.

Step 5: Deploying Your Enhanced Language Model

Once you are satisfied with the flow’s performance, deploying it is as simple as clicking the Deploy button. This makes the prompt flow available for applications to consume through an endpoint and API keys.
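
An application could then call the deployed flow over HTTPS. The sketch below uses only the Python standard library and rests on several assumptions: the endpoint URL and key are placeholders, the flow input is assumed to be a field named `question`, and the key is assumed to be sent as a bearer token. Check your deployment's details for the actual URL, input names, and authentication scheme.

```python
import json
import urllib.request
from typing import Any


def build_request(endpoint_url: str, api_key: str, question: str) -> urllib.request.Request:
    """Build a POST request carrying the question as JSON (input name is an assumption)."""
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )


def ask(endpoint_url: str, api_key: str, question: str) -> Any:
    """Send the request and return the parsed JSON response."""
    request = build_request(endpoint_url, api_key, question)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Placeholder values; building the request does not touch the network.
    req = build_request("https://example.invalid/score", "YOUR_API_KEY", "What is RAG?")
    print(req.get_method(), req.full_url)
```

Keep the API key out of source control, for example by reading it from an environment variable.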

Conclusion

By following this step-by-step guide, you’ve learned how to enhance language models using RAG architecture in Azure AI Studio. RAG lets your models retrieve relevant information before generating a response, making them more accurate and more responsive to user queries. Azure AI Studio’s integrated ecosystem significantly streamlines the AI application development process. This guide also demonstrates how Azure AI Studio can be used to build AI solutions rapidly.